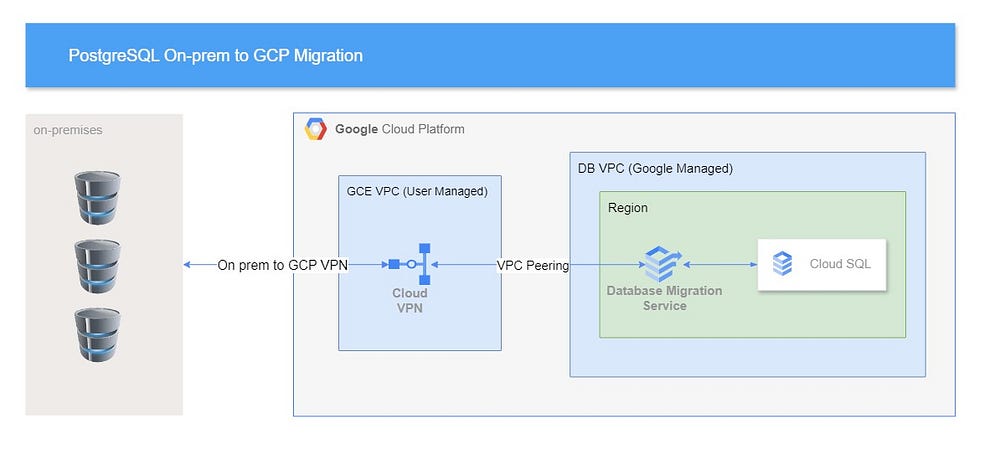

PSQL On-prem to GCP Migration

As businesses scale, cloud migration becomes a vital step in modernizing

their infrastructure. Migrating databases like PostgreSQL from on-premises

to Google Cloud Platform (GCP) offers benefits like scalability, security,

and managed services. In this article, we’ll explore how to migrate your

PostgreSQL database from an on-prem setup to GCP, using Google Cloud SQL

and Google’s Database Migration Service (DMS)

Why Migrate PostgreSQL to GCP?

Google Cloud offers a fully managed PostgreSQL solution called Cloud SQL,

which simplifies database management, supports automated backups, and

ensures high availability. The migration process is streamlined using

Google’s Database Migration Service (DMS), which offers continuous

replication with minimal downtime.

Key Features of GCP for PostgreSQL Migration

- Cloud SQL for PostgreSQL: Fully managed, scalable PostgreSQL with automated backups, high availability, and built-in security features.

- Google DMS: Simplifies migrations with minimal downtime by supporting PostgreSQL, MySQL, and SQL Server.

- Security: Data is encrypted during transit and at rest, with options for SSL/TLS and VPN tunnels for additional security.

Security

Data Encryption:

- Data is protected during migration. Database Migration Service supports multiple secure, private connectivity methods to protect your data in transit.

- Use SSL/TLS to secure data in transit between the on-premises database and the GCP environment.

- This ensures all data transmitted over the internet during the migration process is encrypted.

- Once migrated, all data is encrypted by default, and Google Cloud databases provide multiple layers of security to meet even the most stringent requirements.

VPN Configuration:

- Configure a VPN tunnel between on-premises infrastructure and GCP to establish a secure and private connection over the public internet.

- Use Google Cloud VPN, supporting both site-to-site IPsec VPN connections and Cloud Interconnect for higher bandwidth and reliability.

- Ensure the VPN configuration adheres to best practices for encryption algorithms and key management.

Access Controls:

- Implement stringent access controls to limit who can initiate and manage the database migration process.

- Use Identity and Access Management (IAM) roles and permissions to ensure only authorized personnel have the necessary access.

- Employ multi-factor authentication (MFA) for an added layer of security.

Network Security:

- Utilize firewall rules and security policies to restrict inbound and outbound traffic to necessary ports and IP addresses involved in the migration.

- Use Virtual Private Cloud (VPC) firewall rules on GCP to control traffic flow and implement network segmentation.

Policy and Performance Considerations:

- Check with the security administrator whether company policy forbids data transfers over the public internet.

- Large-scale data transfers might negatively impact the performance of the production network.

Types of migration

Continuous migration

Continuous migration involves a continuous flow of changes from a source

to a destination following an initial full dump and load. Once the

destination is ready, a promote operation is performed to make it the

primary database.

Process:

- Take an initial snapshot of the source database (brief lockout).

- Load the snapshot into the destination.

- Recreate constraints (primary keys, foreign keys, indexes) on the destination.

- Process ongoing changes (CDC) from source to destination.

- When ready, stop writes to the source and promote the destination to the primary database.

Pros:

- Minimal Downtime: The source can continue to accept writes during most of the migration process, reducing overall downtime.

- Real-time Data Sync: Ongoing changes are continuously captured and applied to the destination, ensuring up-to-date data.

- Gradual Transition: Allows for a more seamless switch over to the new database with less impact on applications.

Cons:

- Complex Setup: Requires more configuration and monitoring to ensure continuous data capture and replication.

- Replication Delay: There might be a lag between the source and destination, leading to potential data consistency issues during the final promotion.

- Resource Intensive: Continuous replication can consume more resources, affecting performance.

One-time migration

One-time migration is a single point-in-time snapshot of the database

taken from the source and applied to the destination. The destination

becomes the primary database once the load is completed.

Process:

- Stop writes to the source database.

- Take a dump of the source database.

- Load the dump into the destination.

- Once the load is complete, automatically promote the destination to the primary database.

Pros:

- Simpler Process: Easier to set up and manage compared to continuous migration.

- No Replication Lag: Ensures consistency since the migration is done in one go without ongoing changes.

- Less Resource Intensive: Does not require continuous replication, reducing resource usage.

Cons:

- Higher Downtime: Requires stopping writes to the source database, leading to potential downtime for dependent applications.

- Data Freshness: Data is only as fresh as the last snapshot, so any changes after the dump won’t be captured until the next snapshot.

- Risk of Data Loss: If there are any issues during the migration, it could result in data loss or corruption without the ability to continuously sync.

Use Cases

Continuous Migration:

- Ideal for mission-critical applications where downtime must be minimized.

- Suitable for large databases where a gradual and controlled transition is necessary.

- Useful when the source database must remain operational for writes during most of the migration process.

One-Time Migration:

- Suitable for smaller databases or less critical applications where downtime is acceptable.

- Ideal when a simple and quick migration process is preferred.

- Appropriate when the source database can be easily stopped for the duration of the migration.

Migration Flow

1. Preparation and Prerequisites

Before initiating the migration, ensure the following prerequisites are

met:

- Install necessary extensions such as pglogical on your source PostgreSQL database to facilitate continuous replication.

- Set up a secure connection between your on-prem environment and GCP using Google Cloud VPN.

- Learn more about VPN setup on GCP

- Evaluate your on-premises environment to ensure it meets compatibility requirements for Cloud SQL.

2. Select the Appropriate Migration Type

There are two common types of migration strategies for PostgreSQL

databases on GCP:

Continuous Migration: This involves continuous replication between your

on-prem database and Cloud SQL.

- Pros: Minimal downtime and real-time data sync.

- Cons: More complex to configure and resource-intensive.

One-Time Migration: A single snapshot of your database is migrated to GCP

in one go.

- Pros: Simpler and less resource-intensive.

- Cons: Requires stopping database writes during migration, leading to higher downtime.

3. Configuring Connectivity

Ensure secure, encrypted connectivity between your on-prem database and

GCP. Configure:

- VPN: Set up a VPN tunnel to securely connect your on-prem environment to GCP.

- VPC Peering: Set up VPC peering for network connectivity between your on-prem and GCP environments.

- VPC Peering setup guide

4. Create Connection Profiles

Using Google Cloud Database Migration Service (DMS), create connection

profiles for both the source (on-prem) and destination (Cloud SQL).

- Define the source as your on-prem PostgreSQL.

- Define the destination as Cloud SQL for PostgreSQL.

- Test the connectivity to ensure everything is properly configured.

5. Initiate the Migration Job

Once the profiles are created, initiate the migration job:

- Choose the connectivity method (VPN or VPC Peering).

- Configure and select the replication type (continuous or one-time).

- Test the migration job before full execution to ensure that configurations are correct.

- More on configuring migration jobs

6. Monitor the Migration Process

Google’s DMS offers robust monitoring tools that provide real-time

updates on your migration’s progress. Monitor the ongoing migration for

potential issues like network latency, resource constraints, or

replication lag.

- Use tools to monitor row counts and data consistency.

- Set up cascading read replicas for testing, allowing you to validate the migration without affecting production systems.

- Monitor your migration

7. Promote the Replica to Primary

Once the migration is complete, promote the GCP Cloud SQL instance to

your primary database. Ensure that you stop all writes to the source

database before performing this step.

Cascading read replicas for a Cloud SQL

Database Migration Service lets you migrate data to a Cloud SQL

destination instance, and then set up cascading read replicas for that

instance. Cascading read replicas let you create a read replica under a

Cloud SQL read replica in the same or different region before promoting

your instance to primary.

Data Validation

After migration, it’s crucial to validate that the data in the Cloud SQL

instance matches your on-prem database. This involves:

- Row Counts Comparison: Ensure that the number of rows matches between the source and the destination.

- Checksum Verification: Generate checksums on both the source and target to verify data integrity.

- Data validation techniques

Storage Transfer Service uses metadata available from the source storage

system, such as checksums and file sizes, to ensure that data written to

Cloud Storage is the same data read from the source.

If the checksum metadata on the source storage system indicates that the

data Storage Transfer Service received doesn’t match the source data, the

Storage Transfer Service records a failure for the transfer

operation.

Schema and Data Integrity Testing

Run functional tests to verify that all relationships and constraints

within the application are intact post-migration. This can be done

by:

Row Counts Comparison

Ensure that the number of rows in each table matches between the source

and destination databases. Execute the following query for each

table:

SELECT COUNT(*) FROM <table_name>;

Checksum Verification

This verifies that the data content is identical between the source and

destination databases. Generate checksums for each table. This can be done

using functions like pg_checksums or custom scripts:

SELECT md5(string_agg(t::text, '')) FROM (SELECT * FROM

<table_name> ORDER BY <primary_key>) t;

Data Sampling and Comparison

Ensure that sampled data points are identical in both databases. Randomly

select a sample of rows from each table:

SELECT * FROM <table_name> ORDER BY RANDOM() LIMIT

<sample_size>;

What Isn’t Migrated by Google DMS

Google’s DMS supports a wide variety of data migrations, but there are

several key elements that are not migrated automatically. Understanding

these exclusions can help you prepare the necessary manual steps to ensure

a smooth transition:

- Large Objects (LOBs): PostgreSQL’s logical decoding does not support replicating large objects. Rows referencing large objects are replicated, but the large object itself is not, meaning attempts to access them on the destination will fail.

- Tables without Primary Keys: For tables that lack primary keys, Google DMS can only replicate the initial snapshot and INSERT operations. Any UPDATE or DELETE statements must be manually migrated.

- Materialized Views: Only the schema of materialized views is migrated. The data within these views is not copied over. To populate them after migration, you must manually execute a REFRESH MATERIALIZED VIEW command.

- SEQUENCE States: The SEQUENCE states, such as last_value, may differ between the source and destination after migration. This difference must be addressed post-migration to maintain continuity in the sequence generation.

- Custom Tablespaces: Customized tablespaces are not migrated. All data is moved to the default pg_default tablespace in Cloud SQL.

- User Accounts: DMS does not migrate user accounts. To add users to a Cloud SQL instance, this must be done manually through the Google Cloud console or a PostgreSQL client.

Known Limitations of Google DMS

Google DMS, while powerful, has certain limitations that affect the

migration process:

- DDL Replication: Data Definition Language (DDL) changes, such as table structure modifications, are not replicated by default. To propagate DDL changes, users need to utilize the pglogical.replicate_ddl_command function. DDL changes must be manually synchronized between the source and destination databases.

- Unlogged and Temporary Tables: Neither unlogged nor temporary tables are supported for replication in Google DMS.

- Encryption Issues: If source databases are encrypted with customer-managed keys that DMS cannot access, the migration process will fail. However, if the data is encrypted using the pgcrypto extension, it can be migrated successfully.

- Writable Destination: The destination database remains writable during migration, allowing for DDL changes. However, altering database configurations or table structures during this phase can disrupt the migration process or compromise data integrity.

- Materialized Views: Materialized views require manual refreshing after migration. This extra step is often necessary to ensure the migrated database is fully functional.

Common Causes of Data Migration Issues

Even with a well-structured migration plan, certain issues may arise due

to various environmental or technical factors. Being aware of these common

causes of migration problems can help you mitigate risks and ensure a

successful migration:

- Network or Connectivity Problems: Network instability or insufficient bandwidth can slow data transfer or cause connectivity failures between on-premises systems and Google Cloud. This is especially critical for continuous migrations where real-time synchronization is essential.

- Data Format or Encoding Errors: Incompatible data formats or incorrect character encoding between the source and destination databases can lead to migration failures or corrupted data. Ensure that both environments are using compatible formats before starting the migration.

- Resource Limitations: Insufficient disk space, CPU, or memory on either the source or destination systems can result in slow migrations or failed processes. Planning for resource allocation is especially important for large databases that demand significant bandwidth and compute power.

- Permissions and Access Control Issues: Misconfigured service accounts or IAM roles can prevent DMS from accessing source or target databases. It’s crucial to ensure that the correct permissions are granted to the accounts managing the migration.

Conclusion

Migrating databases from on-premises infrastructure to Google Cloud

Platform (GCP) using Google Database Migration Service (DMS) offers

organizations a robust and secure solution to modernize their database

environments. The process simplifies the transfer of various database

workloads, such as MySQL, PostgreSQL, and Oracle, to GCP services like

Cloud SQL and AlloyDB. Continuous data replication and minimal downtime

during the migration ensure smooth operations for mission-critical

applications.

Security is paramount, with GCP providing end-to-end encryption for data

in transit and at rest, while VPN and firewall configurations further

safeguard the migration process. Access control measures, including IAM

roles and multi-factor authentication (MFA), help secure the entire

migration pipeline.

Two migration methods — continuous and one-time — offer flexibility based

on the size and criticality of the databases. While continuous migration

minimizes downtime and ensures real-time synchronization, it can be

resource-intensive and complex to manage. In contrast, one-time migration

is simpler and more suitable for less critical applications but involves

longer downtime.

In addition to the technical execution, organizations must carefully

consider policy and performance implications, such as security

restrictions on data transfers and the potential impact of large-scale

data movements on network performance. By adhering to GCP’s best practices

for network security, data validation, and application-level testing,

organizations can ensure a seamless and secure migration with minimized

risks.

Comments

Post a Comment